Web Scraper Integration Guide

Introduction

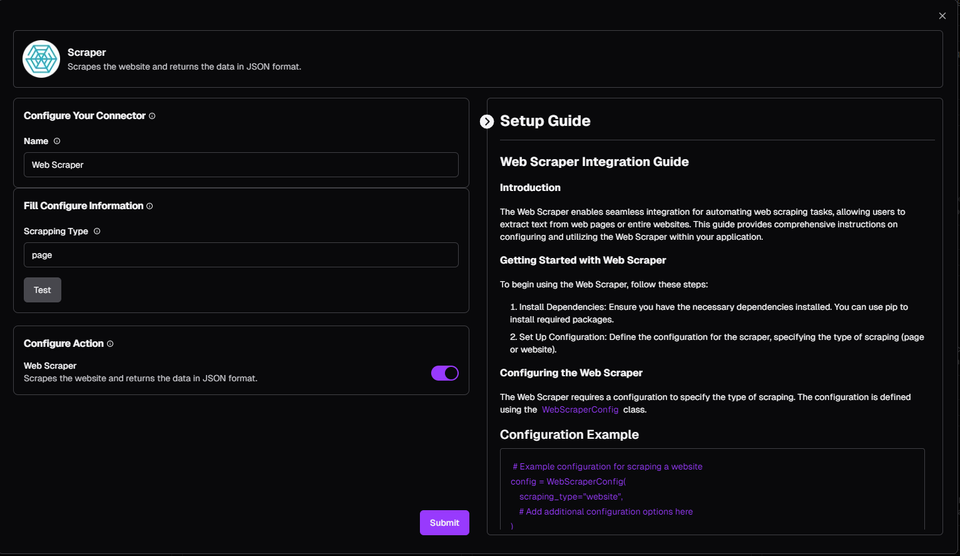

The Web Scraper automates web scraping tasks, extracting text from a single web page or from an entire website. This guide explains how to configure and use the Web Scraper within your application.

Getting Started with Web Scraper

To begin using the Web Scraper, follow these steps:

- Install Dependencies: Ensure you have the necessary dependencies installed. You can use pip to install required packages.

- Set Up Configuration: Define the configuration for the scraper, specifying the type of scraping (page or website).

Configuring the Web Scraper

The Web Scraper requires a configuration to specify the type of scraping. The configuration is defined using the WebScraperConfig class.

Configuration Example

    # Example configuration for scraping a website
    config = WebScraperConfig(
        scraping_type="website",
        # Add additional configuration options here
    )

Utilizing the Web Scraper

The Web Scraper provides actions for scraping text from a specified URL.

Actions

scrape

- Inputs: website URL

- Outputs: scraped data
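
Because the scrape action takes a website URL as its input, it can help to check the URL before passing it on. The following is a minimal sketch using only the Python standard library; the helper name is_valid_scrape_url is hypothetical and is not part of the Web Scraper itself.

```python
from urllib.parse import urlparse


def is_valid_scrape_url(url):
    """Return True if url is an absolute http(s) URL with a host.

    Hypothetical helper for illustration; not part of the Web Scraper.
    """
    parsed = urlparse(url.strip())
    # Require an explicit web scheme and a non-empty host part.
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


if __name__ == "__main__":
    for candidate in ("https://example.com/docs", "example.com"):
        print(candidate, is_valid_scrape_url(candidate))
```

Rejecting malformed input early keeps failures close to their cause instead of surfacing later as a network error.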

Scraping Text

Scrape Text: Extract text from a web page or an entire website based on the provided URL.
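
To illustrate what extracting text from a page involves, here is a minimal sketch built on Python's standard html.parser module. It is not the Web Scraper's own implementation, which this guide does not describe; the names TextExtractor, extract_text, and fetch_html are assumptions made for the example.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class TextExtractor(HTMLParser):
    """Collects visible text, skipping <script> and <style> contents."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped tag
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is outside skipped tags.
        if self._skip_depth == 0:
            text = data.strip()
            if text:
                self._chunks.append(text)

    def text(self):
        return " ".join(self._chunks)


def extract_text(html):
    """Return the visible text of an HTML document as one string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


def fetch_html(url, timeout=10):
    """Download a page and decode it (hypothetical helper, needs network)."""
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


if __name__ == "__main__":
    sample = "<html><body><h1>Hello</h1><p>World</p></body></html>"
    print(extract_text(sample))
```

Scraping a whole website would repeat the same extraction for each page reached by following links on the same host; that crawl loop is omitted here to keep the sketch short.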

Best Practices

- Optimize Scraping Performance: Tune the scraper to minimize resource consumption, for example by scraping a single page rather than an entire website when only one page is needed.

- Error Handling: Implement robust error handling mechanisms to gracefully handle errors encountered during scraping.

- Respect Website Policies: Ensure that your scraping activities comply with the website's terms of service and robots.txt file.

- Security: Securely manage and protect any sensitive information used during scraping.
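
Two of the practices above, error handling and respecting robots.txt, can be sketched with the Python standard library alone. The code below is an illustration under stated assumptions, not part of the Web Scraper: is_allowed and with_retries are hypothetical helpers, and the fetch argument stands in for whatever function performs the actual request.

```python
import time
import urllib.robotparser


def is_allowed(robots_txt, user_agent, url):
    """Check a URL against the text of a robots.txt file."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def with_retries(fetch, url, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying on OSError with exponential backoff.

    urllib's URLError and socket timeouts are OSError subclasses, so
    typical network failures are covered. The last error is re-raised
    once all attempts are used up.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return fetch(url)
        except OSError as error:
            last_error = error
            if attempt < attempts - 1:
                # Wait base_delay, then 2x, 4x, ... between attempts.
                sleep(base_delay * 2 ** attempt)
    raise last_error


if __name__ == "__main__":
    rules = "User-agent: *\nDisallow: /private/\n"
    print(is_allowed(rules, "my-bot", "https://example.com/private/page"))
```

Passing sleep as a parameter lets the backoff be tested without real waiting; in normal use the default time.sleep applies.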

Conclusion

The Web Scraper extracts text from individual web pages or entire websites. With the scraping type configured appropriately and the best practices above in place, developers can build scraping workflows that streamline data extraction.